Running large language models (LLMs) locally on a Windows machine, especially models with large parameter counts, requires capable hardware. It is also important to monitor system performance while running LLM tasks so you can catch overheating and other performance problems early. This article shows how to monitor GPU performance in Windows 11 for LLM tasks.

Monitor GPU performance in Windows 11 for LLM tasks

Hardware metrics such as CPU and GPU utilization, CPU and GPU temperature, and memory consumption help you optimize workflows, prevent bottlenecks, and diagnose and troubleshoot performance issues. In this article, we use Speccy to monitor GPU performance in Windows 11 for LLM tasks.

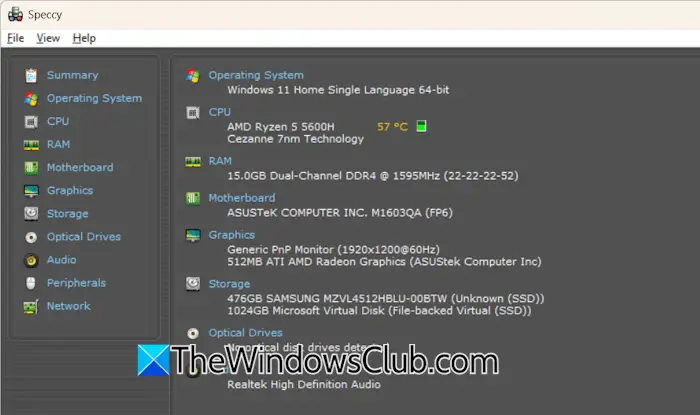

Speccy is a lightweight system information tool from the makers of CCleaner. It is designed to provide detailed hardware specifications, and its user-friendly interface makes it suitable for both casual and technical users who want to keep an eye on system performance.

Key features of Speccy

Let’s look at some of Speccy’s key features.

1] Static GPU information

Speccy gives you detailed static GPU information, including the GPU vendor, model number, driver version, and amount of dedicated VRAM. This information is helpful when you plan a hardware upgrade or want to learn more about your computer's graphics hardware.

Speccy is also useful for verifying that your system meets the hardware requirements of a local LLM. Models with large parameter counts need capable hardware to run locally on a Windows machine, and Speccy's detailed GPU specifications help you determine whether your system can run them.

2] Real-time monitoring

Thermal throttling is a safety mechanism that protects the CPU and GPU from permanent damage caused by overheating. When the CPU or GPU reaches its thermal limit, the system automatically lowers its clock speed to reduce heat, which in turn reduces the system's performance.

You can prevent thermal throttling by monitoring temperatures and installing adequate cooling in your computer. Speccy's real-time monitoring feature shows the GPU's current temperature, so you can take the necessary measures if it climbs toward its thermal limit during prolonged LLM tasks.

The real-time monitoring feature is available only in the Pro version of Speccy. Also, Speccy does not display GPU utilization as a percentage or any other load metric, which limits the performance insights it can offer.
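Because Speccy is a GUI tool without GPU utilization figures, you may also want a scriptable log during long LLM runs. On NVIDIA GPUs, the `nvidia-smi` utility installed with the driver can report temperature, utilization, and memory use. The Python sketch below is one way to poll it; it is not a Speccy feature. It assumes an NVIDIA GPU with `nvidia-smi` on the PATH, and the 83 °C threshold and the helper names (`parse_gpu_csv`, `hot_gpus`, `sample_gpus`, `monitor`) are illustrative choices, not vendor limits or an official API.

```python
import subprocess
import time

# Fields accepted by `nvidia-smi --query-gpu` (list them with `nvidia-smi --help-query-gpu`).
# The name is queried last so that a comma inside it cannot break parsing.
QUERY_FIELDS = "index,temperature.gpu,utilization.gpu,memory.used,memory.total,name"

# Illustrative alert threshold in degrees Celsius; not an official limit for any GPU.
TEMP_LIMIT_C = 83


def _to_int(value):
    """Convert a numeric field; unsupported fields such as '[N/A]' become None."""
    value = value.strip()
    return int(value) if value.isdigit() else None


def parse_gpu_csv(text):
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        # Split into at most 6 parts so the trailing name stays intact.
        index, temp, util, mem_used, mem_total, name = line.split(",", 5)
        gpus.append({
            "index": _to_int(index),
            "name": name.strip(),
            "temp_c": _to_int(temp),
            "util_pct": _to_int(util),
            "mem_used_mib": _to_int(mem_used),   # nvidia-smi reports memory in MiB
            "mem_total_mib": _to_int(mem_total),
        })
    return gpus


def hot_gpus(gpus, limit_c=TEMP_LIMIT_C):
    """Return the GPUs whose temperature is at or above limit_c."""
    return [g for g in gpus if g["temp_c"] is not None and g["temp_c"] >= limit_c]


def sample_gpus():
    """Run nvidia-smi once and return the parsed readings."""
    result = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_gpu_csv(result.stdout)


def monitor(interval_s=5, samples=12):
    """Print one line per GPU every interval_s seconds and flag hot GPUs."""
    for _ in range(samples):
        gpus = sample_gpus()
        for g in gpus:
            print(f"GPU {g['index']} {g['name']}: {g['temp_c']} C, "
                  f"{g['util_pct']}% util, {g['mem_used_mib']}/{g['mem_total_mib']} MiB")
        for g in hot_gpus(gpus):
            print(f"WARNING: GPU {g['index']} is at {g['temp_c']} C")
        time.sleep(interval_s)
```

Running `monitor(interval_s=5, samples=60)` in a separate terminal while the model runs logs roughly five minutes of readings. Parsing is kept separate from the `subprocess` call so the parser can be checked against saved output without a GPU.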

3] Additional features

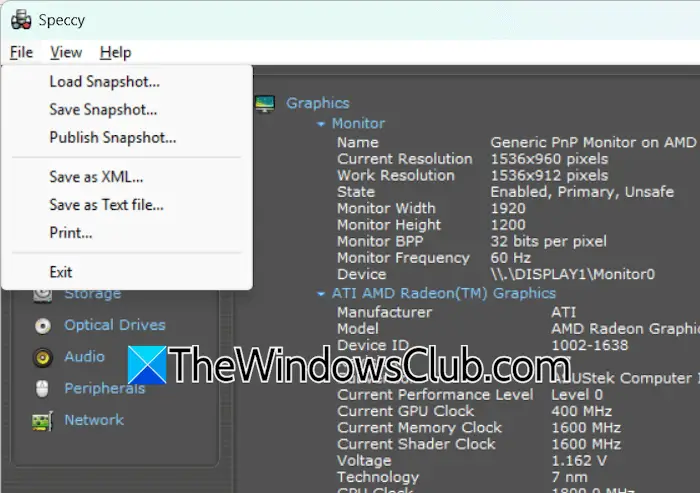

Speccy also provides some additional features, such as System Snapshot, which lets you save and share a snapshot of all your system's hardware specifications. To take a snapshot, go to File and click Save Snapshot. You can also save your system's specifications as a text file.

Speccy Pricing

Speccy is available in Free and Pro versions. The Free version can be downloaded from the official website but has some limitations: it shows basic hardware specs and static GPU data, but it does not offer real-time monitoring.

Speccy Professional provides you with the following features:

- Advanced PC insights

- Automatic updates

- Real-time data monitoring

- Premium support

The Professional version of Speccy costs $19.95/year. For more details on its pricing, see Speccy's official website.

Why performance monitoring is useful for LLM tasks

Here is why performance monitoring matters when running large language models.

1] VRAM tracking

Large language models are massive: they have millions to billions of parameters, all of which must be loaded into memory for inference. Inference runs fastest when these weights fit in the GPU's VRAM, which gives the GPU much higher memory bandwidth than standard system RAM. VRAM tracking is therefore critical for large models, as it helps you avoid exceeding the available memory.
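To turn this into a number you can compare against the dedicated VRAM that Speccy reports, a common rule of thumb is that the weights take roughly parameters × bytes per parameter. For example, a 7-billion-parameter model stored at 16-bit precision needs about 7 × 2 = 14 GB for its weights alone. The Python sketch below encodes that estimate. The 1.2 overhead factor for the KV cache, activations, and runtime buffers is an assumption rather than a measured value, and the function names are hypothetical helpers for illustration.

```python
# Assumed bits per weight for common formats. Real quantized files (e.g. 4-bit
# schemes) also store small per-block scales, which this sketch ignores.
BITS_PER_WEIGHT = {"fp32": 32, "fp16": 16, "int8": 8, "int4": 4}


def weights_gb(params_billion, precision):
    """Memory needed for the weights alone, in GB (1 GB = 10**9 bytes).

    params_billion * 1e9 weights * (bits / 8) bytes each / 1e9 bytes per GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * BITS_PER_WEIGHT[precision] / 8


def fits_in_vram(params_billion, precision, vram_gb, overhead=1.2):
    """Check whether the weights plus an assumed overhead fit in vram_gb.

    overhead is a rough, hypothetical multiplier for the KV cache, activations,
    and runtime buffers; real usage depends on context length and the runtime.
    """
    return weights_gb(params_billion, precision) * overhead <= vram_gb


def precisions_that_fit(params_billion, vram_gb, overhead=1.2):
    """List the formats, highest precision first, whose estimate fits in vram_gb."""
    return [p for p in BITS_PER_WEIGHT
            if fits_in_vram(params_billion, p, vram_gb, overhead)]
```

For example, `precisions_that_fit(7, 12)` returns `['int8', 'int4']`: under these assumptions, a 7B model would need 8-bit or 4-bit quantization to fit on a GPU with 12 GB of dedicated VRAM. Treat the result as a first filter and confirm by watching actual VRAM use while the model runs.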

2] Temperature alerts

Thermal throttling protects the CPU and GPU from permanent damage by reducing their clock speeds when temperatures exceed safe levels, but the lower clock speeds also reduce performance. Speccy provides temperature alerts to help you mitigate the risk of thermal throttling during intensive LLM inference tasks.

3] Quick setup verification

Speccy can help you quickly verify your setup before running a large language model. You can confirm your GPU specs (e.g., NVIDIA compatibility, VRAM size) before deploying a model on your machine.

Closing words

Running a large language model on a Windows machine requires capable hardware. Speccy helps you determine whether your system can run an LLM with the parameter count you need.
